Why go open?

I think the first time I ranted publicly about publishing was during Epidemics6. At the time, I was making a plea for open software practices in science, which most people now seem to agree on: transparent, free, reproducible science seems to be the way forward. Practicing my talk for this conference, organised by a large publishing company, I realised these ideals were thrown away in one of the most important aspects of a researcher’s life: the publication of peer-reviewed papers. Back then, I tried to outline quickly why I thought we could do better and added a few slides to my talk, which caused a bit of laughter and some interesting follow-up conversations. In this post I’ll try to briefly explain why I think the currently dominant publishing practice is not working, and how we can make it better.

Scientific publishing: a terrible business model

This is the easy part. While open-access journals are becoming more common, a large number of journals still charge for access to their content. In short: you can access science only if you can afford it. This directly contradicts the idea of a science open to all. It is also poor value for money for society as a whole, due to a fairly immoral business model:

- scientists pay to provide the very material publishers make money from

- scientists then pay again, via their academic institutions most of the time, to gain access to content

- the heavy lifting in the publishing industry (reviewing, editing) is done by scientists, most of the time working for free

- as science is often publicly funded, this is effectively a stream of money going from taxpayers to private companies, without any benefit for society; it only makes science less accessible

This model is quite hard to defend whilst keeping a straight face. In fairness, it is also probably dying, as open-access journals are becoming more popular. I would argue that open access is good, but open publishing (i.e. published reviews, disclosed referees) is better.

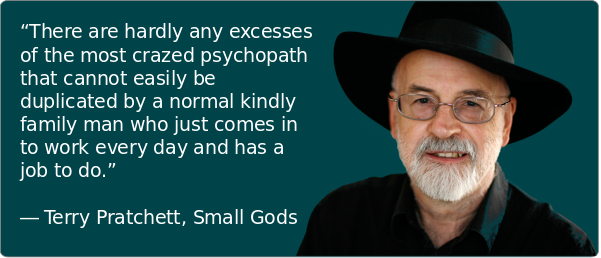

Closed review: a culture of abuse

Social psychology has intensively studied the determinants of abusive behaviour, especially in relation to hierarchical dynamics and authority (e.g. Haney, Banks, and Zimbardo 1972; Milgram 1963, 1965). See Zimbardo (2011) for an accessible introduction to the field, and Aronson (2003) for a deeper introduction to social psychology in general.

In (very) short: under specific circumstances, most people will engage in abusive behaviours [1]. Various external factors may shape such circumstances, but amongst the most common ones we often find:

- being in a position of power

- impunity

- having a third party in a position of authority who is held responsible for the perpetrator’s actions

- deriving a personal gain from the abuse

- having been a victim of abuse yourself

Now, if you think about these factors in the context of an anonymous reviewing process, you will find that all of the above apply. Referees are in a position of power with respect to authors. Anonymity gives them impunity. An editor is officially in charge. Referees often review the work of their perceived competitors, so they have an interest in stalling or blocking it. And everyone who has ever submitted a paper has some horror stories about poor or unfair reviewing: a paper half read by a referee who asks for material already present, insulting or derogatory comments, stalled papers, and unsubstantiated claims, i.e. claims that do not pass the basic test of standard scientific practice.

Open review as a step forward

Most of the factors described above hinge on the fact that referees are anonymous, and usually not held accountable for their work as referees. The solution is an easy one: make the reviewing itself an integral part of transparent scientific practices. Publish reviews alongside papers, as well as the successive revisions of the manuscript, so that one can track how things evolved during the peer review process. Derive metrics to reward constructive and helpful reviews, and make them part of the way scientists are evaluated (is there an R-index yet?).

I won’t claim this system is without fault, but so far every argument I have heard against it has a far worse counterpart in the existing system. Open review is common practice in software development (code reviews), where it is a very useful instrument for improving work collaboratively. And an increasing number of journals, such as F1000 and BMC, are now using open reviews. In the age of open data, open software and open science, fully open publishing seems to be the only reasonable way forward.

References

Aronson, E. 2003. Readings About the Social Animal. Macmillan.

Haney, C., C. Banks, and P. Zimbardo. 1972. “Interpersonal Dynamics in a Simulated Prison.” Stanford Univ CA Dept of Psychology.

Milgram, S. 1963. “Behavioral Study of Obedience.” J. Abnorm. Psychol. 67 (October): 371–78.

———. 1965. “Some Conditions of Obedience and Disobedience to Authority.” Hum. Relat. 18 (1). SAGE Publications Ltd: 57–76.

Zimbardo, P. 2011. The Lucifer Effect: How Good People Turn Evil. Random House.

[1] At this stage most people think ‘not me’ (I did), which usually means ‘especially you’.